For your website to show up in Google's search results (the SERPs), Google must first index it. If your site is new, Google may not know it exists yet. In this blog post, we'll cover a few ways to get Google to crawl your website.

In our last blog post, on how often Google SERPs change, we talked about crawlers and briefly mentioned Google Search Console, sitemaps and robots.txt. You can use these three to tell Google to crawl your website.

Google Search Console

Google Search Console is a Google tool for analyzing your search traffic, troubleshooting issues with your website and getting useful reports.

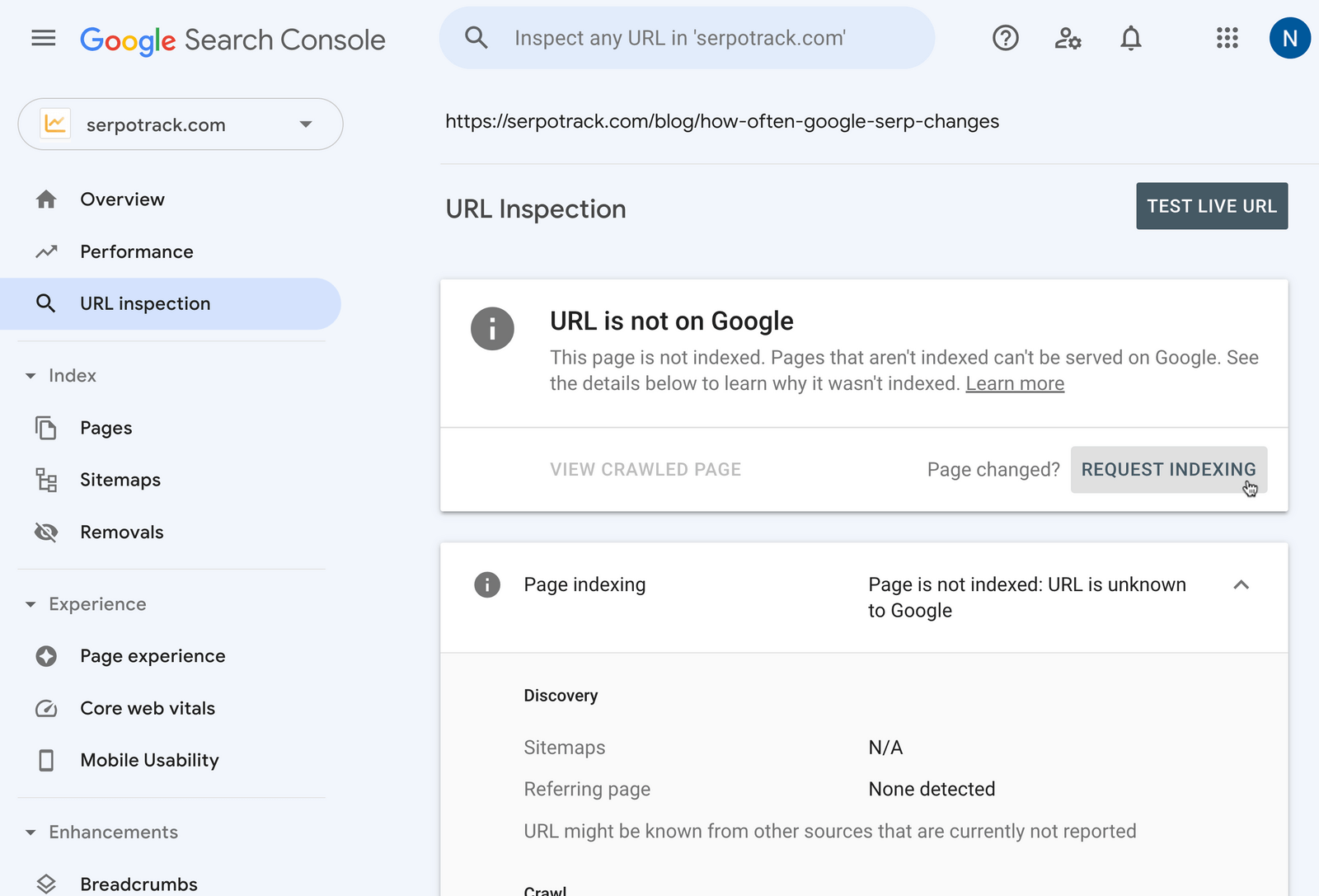

Once you have added your website to Search Console, you can ask Google to crawl specific URLs. To start, simply enter your homepage URL in the search bar at the top. Search Console will then show the URL Inspection report for that URL, where you can click the “Request indexing” button.

If every page on your website can be reached through links from other pages, crawlers will discover those pages automatically, so there is no need to submit each URL. If some pages aren't linked from anywhere, you can submit them manually. On a large website, however, this quickly becomes time-consuming and inefficient, and a sitemap is usually the better option.
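To make link-based discovery a bit more concrete, here is a toy sketch in Python. It is not how Googlebot works internally; it assumes a hypothetical site held in an in-memory dictionary (no real HTTP requests) and simply follows `<a href>` links breadth-first, starting from the homepage. Any page it never reaches is an "orphan" page, which is exactly the kind of URL you would submit manually or list in a sitemap.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href values of <a> tags in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def discover(pages, start):
    """Breadth-first walk over in-memory pages, following links like a crawler.

    `pages` maps an absolute URL to its HTML; links to URLs not in `pages` are ignored.
    """
    seen = {start}
    queue = deque([start])
    while queue:
        url = queue.popleft()
        parser = LinkExtractor()
        parser.feed(pages[url])
        parser.close()
        for href in parser.links:
            target = urljoin(url, href)  # resolve relative links like "/blog"
            if target in pages and target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


# Hypothetical site: /old-offer is not linked from any other page.
site = {
    "https://your-website.com/": '<a href="/blog">Blog</a> <a href="/about">About</a>',
    "https://your-website.com/blog": '<a href="/blog/post-1">Post 1</a>',
    "https://your-website.com/blog/post-1": '<a href="/">Home</a>',
    "https://your-website.com/about": "<p>About us</p>",
    "https://your-website.com/old-offer": "<p>Not linked anywhere</p>",
}

found = discover(site, "https://your-website.com/")
orphans = sorted(set(site) - found)
print(orphans)  # ['https://your-website.com/old-offer']
```

A real crawler also has to fetch pages over HTTP, respect robots.txt and handle redirects, but the core idea is the same: pages without incoming links are hard to find.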

Sitemap

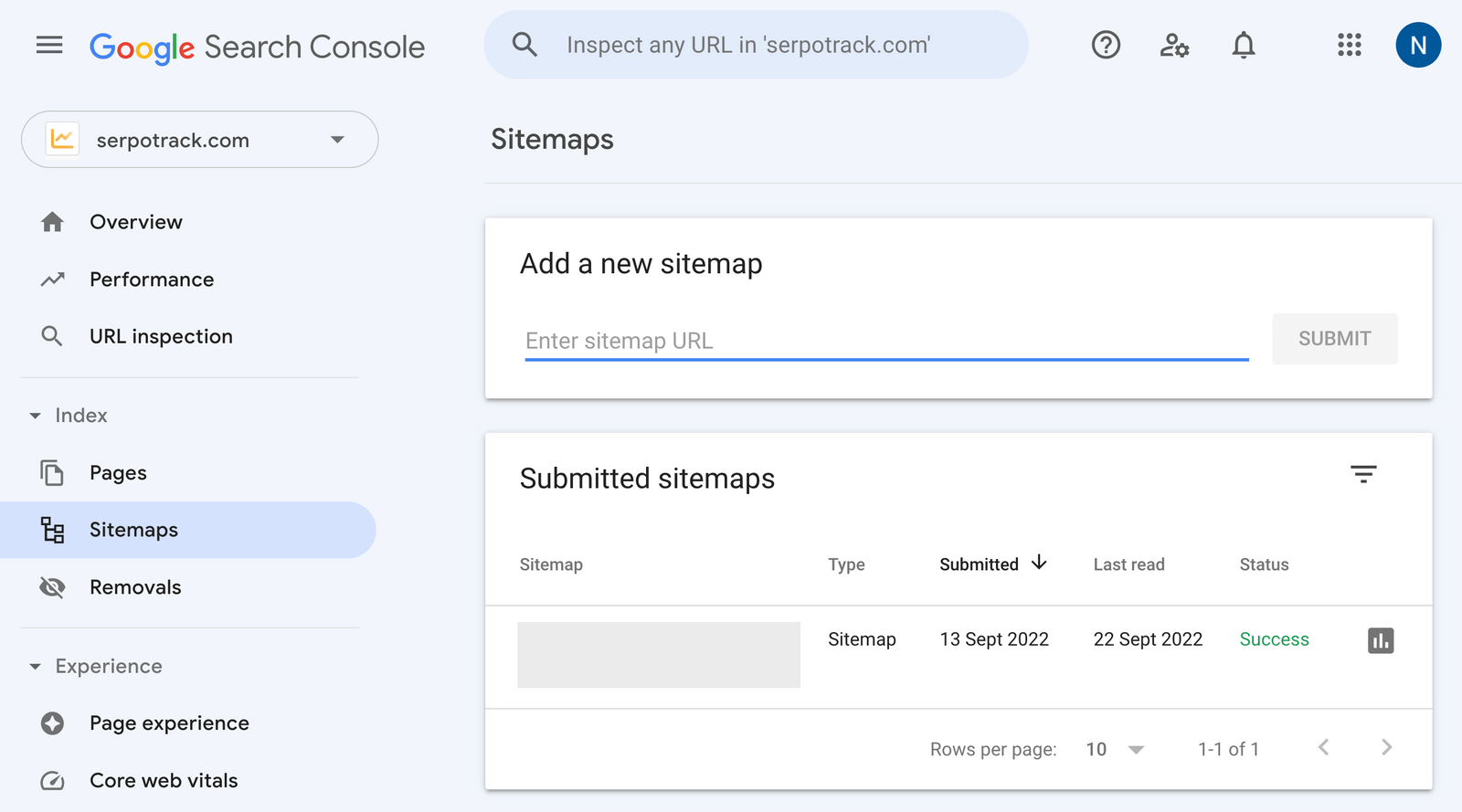

A sitemap is an overview of the pages on your website. It is usually an XML file, hosted on your site, that lists all your public URLs, and it is typically generated automatically. If you use WordPress or a similar CMS, there are plugins that generate sitemaps for you. With a sitemap, you tell Google exactly which URLs your website has. Google re-reads submitted sitemaps periodically, so new URLs you add to your sitemap later will also be picked up.
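If your site doesn't use a CMS plugin, a basic sitemap is easy to generate yourself. The sketch below is a minimal example, assuming Python's standard-library `xml.etree.ElementTree` and a hypothetical list of URLs. It writes only the required `<loc>` element for each URL in the sitemaps.org 0.9 format and leaves out optional tags such as `<lastmod>`.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal sitemap XML document (as a str) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_el = ET.SubElement(urlset, "url")
        # <loc> is the only required child of <url>; ElementTree escapes "&", "<", ">".
        ET.SubElement(url_el, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical URLs; in practice these would come from your CMS or build step.
    print(build_sitemap([
        "https://your-website.com/",
        "https://your-website.com/blog",
        "https://your-website.com/about",
    ]))
```

You would then host the output on your site, for example at your-website.com/sitemap.xml (a hypothetical path), and submit that URL. Escaping characters such as `&` matters here, because the sitemap format requires URLs to be entity-escaped.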

In Google Search Console, you can submit your sitemap's URL from the Sitemaps report. You can also submit more than one sitemap.

Robots.txt

Besides sitemaps, you can use a robots.txt file to tell Google which URLs it may crawl. You can also use robots.txt to tell crawlers where to find your sitemap. Google Search Central has more detailed information on how to use robots.txt.

You don’t have to submit your robots.txt to Google Search Console; web crawlers automatically look for a robots.txt file in the root of your website, for example your-website.com/robots.txt.
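Here is a small, hypothetical robots.txt that blocks an `/admin/` section and points crawlers to a sitemap. To show how a crawler might interpret it, the sketch parses it with Python's standard-library `urllib.robotparser` (`site_maps()` requires Python 3.8 or later). Python's parser only implements the basic rules, so treat this as an approximation; Googlebot's handling of details such as wildcards may differ.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt, as it would be served at your-website.com/robots.txt
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

Sitemap: https://your-website.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# The "*" group applies to every crawler, including Googlebot.
print(parser.can_fetch("Googlebot", "https://your-website.com/blog"))     # True
print(parser.can_fetch("Googlebot", "https://your-website.com/admin/x"))  # False

# The Sitemap line is independent of any User-agent group.
print(parser.site_maps())  # ['https://your-website.com/sitemap.xml']
```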

Conclusion

To actively let Google know that your website should be crawled, add it to Google Search Console and, optionally, submit a sitemap. This tells the crawlers which URLs they can crawl. If everything goes well, Google will eventually add your website to its index, so it can show up in search results.

Note that the methods in this blog post aren’t the only ones, but they are the important basics. See Google Search Central for more details about crawling and indexing.